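Images from the navigation cameras of NASA's Perseverance rover were first processed with COLMAP (https://colmap.github.io/), which uses Structure-from-Motion to compute the relative pose of the camera that captured each image.

Next, the images and their poses were used to train a NeRF with NVIDIA's instant-ngp framework (https://github.com/NVlabs/instant-ngp/).

At this point, novel viewpoints could be generated that differ from the rover's training images described above.

Notably, the NeRF properly and automatically captures very different textures such as large rocks, sand bars and pebbles.

Significant work remains to extend this process, scale it up and improve its resolution, but this rough proof of concept demonstrates the feasibility of this 3D reconstruction approach using raw image data from the Mars rover.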

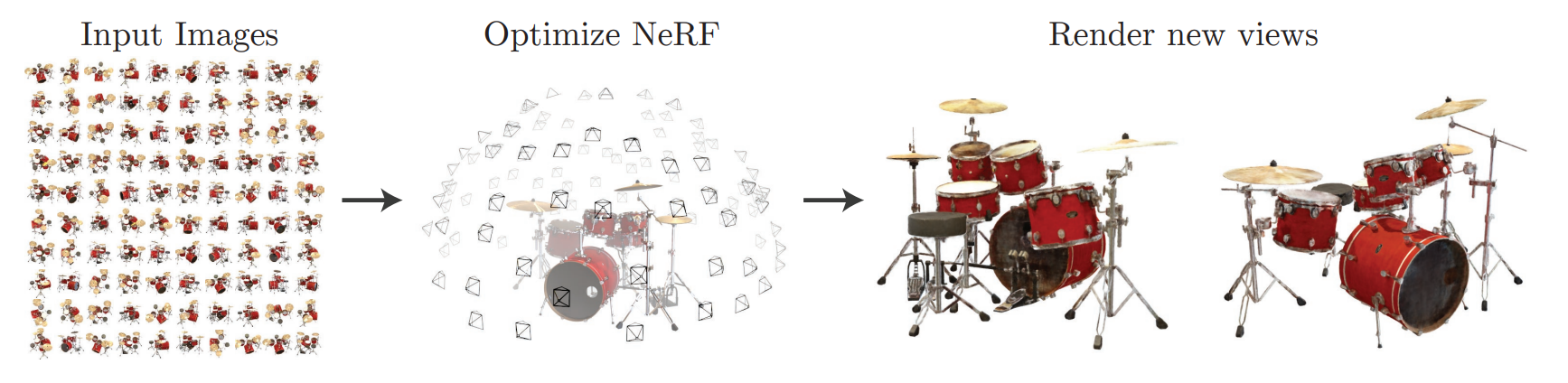

Using NeRF for Simulation:

3D simulation environments enable robotics development, from autonomous driving to warehouse robotics and space exploration. They allow iterative development, testing and validation before, and in parallel with, deploying robots in the real world, which would otherwise be more costly, time-consuming, error-prone and sometimes dangerous. However, creating high-fidelity, large-scale 3D simulation environments is still challenging. In particular, traditional methods must model the shape of every individual asset and then add textures and material effects using secondary processes (e.g. Physically Based Rendering materials). These processes are time-intensive, difficult to scale, and struggle to render the complexity and diversity of the world.

On the other hand, a new paradigm for 3D simulation is emerging: directly using camera images of a scene to reconstruct the 3D environment. In particular, Neural Radiance Fields (NeRF) only need to be trained with a sequence of images associated with their position and direction to be able to render new views of the scene from any direction. The 3D reconstruction operates directly on radiance fields to learn the shapes, textures and complex material effects automatically.
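To make the idea of rendering from a radiance field more concrete, here is a minimal sketch of the compositing step described in the NeRF paper [1]: samples along one camera ray, each with a density and a colour, are blended into a single pixel. The function name `composite_ray` and the plain-list inputs are illustrative assumptions; a real framework such as Instant-NGP does this on the GPU for many rays at once.

```python
import math


def composite_ray(densities, colors, deltas):
    """Blend the samples along one camera ray into a single RGB colour.

    densities: volume density predicted at each sample along the ray
    colors:    (r, g, b) colour predicted at each sample
    deltas:    distance between each sample and the next one
    """
    transmittance = 1.0  # fraction of light that has not been absorbed yet
    pixel = [0.0, 0.0, 0.0]
    for sigma, rgb, delta in zip(densities, colors, deltas):
        # Opacity contributed by this segment of the ray.
        alpha = 1.0 - math.exp(-sigma * delta)
        weight = transmittance * alpha
        for channel in range(3):
            pixel[channel] += weight * rgb[channel]
        # Light remaining for the samples further along the ray.
        transmittance *= 1.0 - alpha
    return tuple(pixel)
```

Because every step is differentiable, the densities and colours can be learned by comparing rendered pixels with the pixels of the input images.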

[1]


Using NeRF-based Simulation for Planetary Exploration Robots:

3D simulations for planetary exploration missions, such as robots on the surface of the Moon and Mars or spacecraft orbiting comets and asteroids, are notoriously difficult to create due to the unstructured nature of the environments, the diversity of materials and the specific lighting effects. However, recent missions have started sending back large quantities of images from these new worlds: a treasure trove of data to create simulated environments.

Asteria ART has performed a proof-of-concept test using the stereo camera images from NASA's Perseverance rover on Mars to show the potential for recreating 3D planetary surfaces.

[2]


- 28 individual frames were extracted from the NASA video of the Perseverance rover driving on Mars, shown above [2]

- The position and direction of the camera for each image were computed relative to one another using COLMAP, a Structure-from-Motion tool [3]

- The NeRF was trained using the Instant-NGP framework [4]

- 180 novel views were generated and assembled together to create the fly-through sequence presented at the top of this post (see a few keyframes below as well)
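The pose-estimation step above can be sketched as a sequence of COLMAP command-line calls. The sub-commands `feature_extractor`, `exhaustive_matcher` and `mapper` are standard COLMAP commands, but the post does not say which options were used for this test, so the choice of matcher, the workspace layout and the helper name `colmap_commands` are all assumptions made for illustration. The helper only builds the commands; it does not run them.

```python
import shlex
from pathlib import Path


def colmap_commands(workspace):
    """Return the COLMAP calls for a basic sparse reconstruction.

    The workspace layout (images/, database.db, sparse/) is a hypothetical
    convention, not one taken from the original experiment.
    """
    ws = Path(workspace)
    database = str(ws / "database.db")
    images = str(ws / "images")
    sparse = str(ws / "sparse")
    return [
        # 1. Detect keypoints and descriptors in every frame.
        ["colmap", "feature_extractor",
         "--database_path", database, "--image_path", images],
        # 2. Match features between all image pairs (feasible for 28 frames).
        ["colmap", "exhaustive_matcher", "--database_path", database],
        # 3. Estimate camera poses and a sparse point cloud.
        ["colmap", "mapper",
         "--database_path", database, "--image_path", images,
         "--output_path", sparse],
    ]


if __name__ == "__main__":
    for command in colmap_commands("mars_scene"):
        print(shlex.join(command))
```

The resulting poses still need to be converted into the format the NeRF trainer expects; the instant-ngp repository ships a `scripts/colmap2nerf.py` script for that purpose.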

The newly generated views from the trained NeRF properly capture the very different textures in the scene automatically: large rocks, sand bars, pebbles, etc. The overlap between these features and their distribution is physically accurate and matches the input images.

New camera views can be generated from any position and direction: this opens the way to a simulation environment in which a rover can drive any path (different from the one the Perseverance rover drove to capture the environment) and rover camera images can be simulated along it. This capability enables the development of vision-based rover navigation algorithms and autonomous driving applications.
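As a rough illustration of driving an arbitrary path, the sketch below turns a list of 2D waypoints into camera poses, one per waypoint, each looking toward the next waypoint. Several things here are assumptions rather than details from the proof-of-concept: the flat ground plane with Z up, the fixed `camera_height`, the OpenGL-style convention in which the camera looks down its negative Z axis, and the helper names. The renderer you use may expect a different convention.

```python
import math


def normalize(v):
    length = math.sqrt(sum(x * x for x in v))
    return tuple(x / length for x in v)


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def look_at_pose(position, target, up=(0.0, 0.0, 1.0)):
    """4x4 camera-to-world matrix for a camera at `position` facing `target`."""
    forward = normalize(tuple(t - p for t, p in zip(target, position)))
    right = normalize(cross(forward, up))
    true_up = cross(right, forward)
    back = tuple(-f for f in forward)  # the camera looks down its -Z axis
    # Columns are the camera's X, Y and Z axes and its position.
    return [
        [right[0], true_up[0], back[0], position[0]],
        [right[1], true_up[1], back[1], position[1]],
        [right[2], true_up[2], back[2], position[2]],
        [0.0, 0.0, 0.0, 1.0],
    ]


def poses_along_path(waypoints, camera_height=1.0):
    """One pose per waypoint (except the last), looking at the next waypoint.

    Consecutive waypoints must differ, otherwise the view direction is
    undefined.
    """
    poses = []
    for (x0, y0), (x1, y1) in zip(waypoints, waypoints[1:]):
        poses.append(look_at_pose((x0, y0, camera_height),
                                  (x1, y1, camera_height)))
    return poses
```

For example, `poses_along_path([(0, 0), (1, 0), (2, 1)], camera_height=2.0)` yields two poses; each could then be handed to the trained NeRF to render the image a rover camera would see from that point.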

However, in this limited proof-of-concept, the horizon and sky are blurry and show ghost-like artifacts: this is mainly due to the limited amount of training data (just 28 images!) and the assumptions within the Instant-NGP framework used.

Future Work:

NeRF-based 3D reconstruction shows incredible promise in representing 3D data more efficiently and seems ready to unlock new ways to generate highly realistic 3D objects in a more cost- and time-efficient way. However, significant work remains to turn this proof-of-concept into a large-scale, high-fidelity simulation of a planetary surface that can be used to support rover and spacecraft development and operations. Asteria ART engineers are tackling this project.

Furthermore, other datasets from other planetary exploration missions could also be used to create novel 3D surface reconstructions:

-

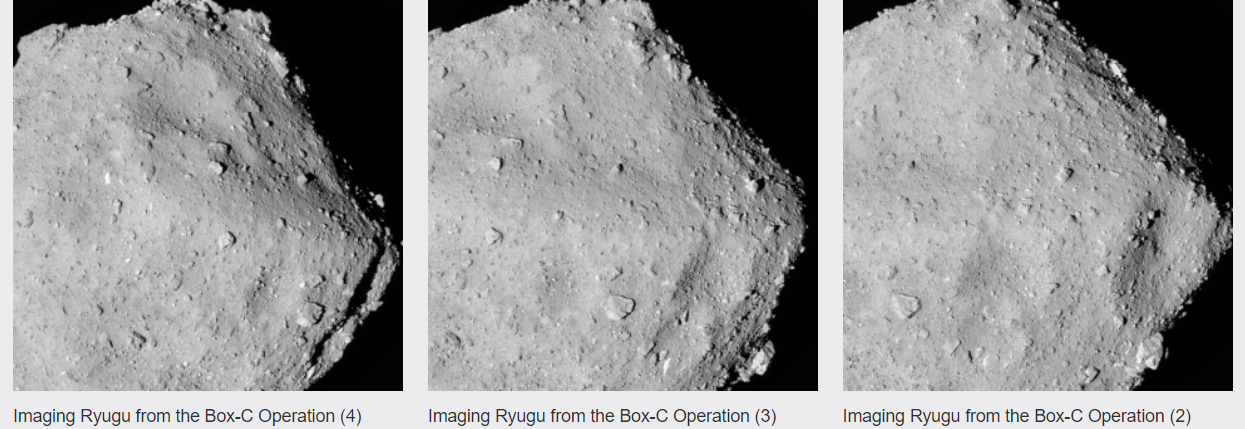

JAXA Hayabusa spacecraft imaging the Ryugu Asteroid as it orbits aroud it

[5] -

ISRO Chandrayaan 3 Pragyan rover surface image sequences

.jpg)

.jpg)

.jpg)

.png)

[6]

The same technology developed for the 3D reconstruction of planetary surfaces can also be applied to terrestrial settings, such as indoor and outdoor spaces:

- warehouse, office or restaurant

- construction site, national park or natural disaster area

- entire city

Credits:

[1] Mildenhall, Ben, et al. "NeRF: Representing scenes as neural radiance fields for view synthesis." Communications of the ACM 65.1 (2021) (available online)

[2] Credit NASA. Perseverance's First Autonav Drive, July 1st, 2021. (Source)

[3] Schonberger, Johannes L., and Jan-Michael Frahm. "Structure-from-motion revisited." Proceedings of the IEEE conference on computer vision and pattern recognition. (2016) (available online)

[4] Müller, Thomas, et al. "Instant neural graphics primitives with a multiresolution hash encoding." ACM Transactions on Graphics (ToG) 41.4 (2022) (available online)

[5] Credit JAXA/ISAS. Image Gallery Feature Ryugu. (available online)

[6] Credit ISRO. Chandrayaan 3 Image Gallery. (available online)